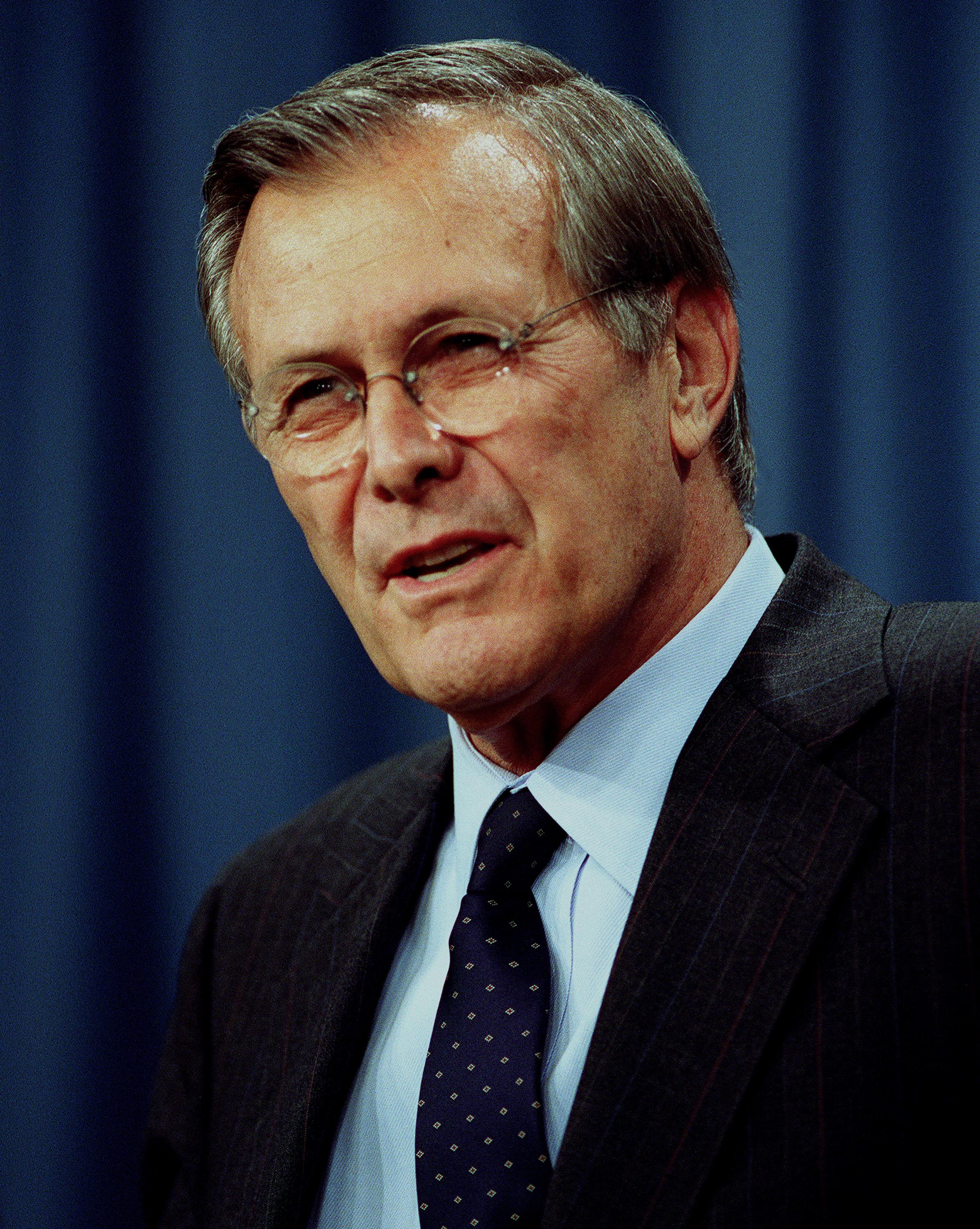

"There are known knowns; there are things we know that we know. There are known unknowns; that is to say, there are things that we now know we don't know. But there are also unknown unknowns - there are things we do not know we don't know."

"There are known knowns; there are things we know that we know. There are known unknowns; that is to say, there are things that we now know we don't know. But there are also unknown unknowns - there are things we do not know we don't know."

-Former US Secretary of Defense, Donald Rumsfeld

This infamous quote left some people befuddled and others impressed. Although it arose in the context of linking the government of Iraq with weapons of mass destruction, uncertainty (everything but the known knowns) pervades the world of evaluation, social impact assessment and performance measurement. Still, we acknowledge it only in a fairly limited way, and dealing with it explicitly is relatively rare. This limited treatment produces a misplaced concreteness in our work and reduces its usefulness. Where does uncertainty lurk in our work, and what can we do to better take it into account?

We are experts at turning grey into black and white

In our world, it is common to translate imprecise and vague notions of performance and impact into a set of numerically crisp values, giving us a false sense of precision and validity. For instance, a typical measure of service quality may be ‘the number of customer complaints per time period’. Yet even this single indicator carries several sources of uncertainty. There can be measurement error in the procedure used to collect complaints, and imprecision in the indicator as a measure of service quality: is a given complaint trivial or serious? And in a given period, do more complaints simply reflect more demanding customers rather than any change in service quality?
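
To see how much a complaint count can move with no change at all in underlying quality, here is a minimal Python sketch. It assumes, purely for illustration, that complaints arrive at random at a constant average rate (a Poisson process) of 20 per month; the rate, the 12-month horizon and the helper name `poisson_sample` are all hypothetical.

```python
import random

# Illustrative assumption: complaints follow a Poisson process with a constant
# mean rate, i.e. service quality does not change at all over the year.
TRUE_RATE = 20.0  # hypothetical mean complaints per month
MONTHS = 12


def poisson_sample(rate: float, rng: random.Random) -> int:
    """Draw one Poisson count by counting exponential inter-arrival times in one period."""
    count = 0
    elapsed = rng.expovariate(rate)  # time of the first complaint
    while elapsed < 1.0:
        count += 1
        elapsed += rng.expovariate(rate)  # time until the next complaint
    return count


rng = random.Random(42)  # fixed seed so the run is reproducible
monthly_counts = [poisson_sample(TRUE_RATE, rng) for _ in range(MONTHS)]

print("Monthly complaint counts:", monthly_counts)
print("Range:", min(monthly_counts), "to", max(monthly_counts), "with identical quality")
```

Any month-to-month swing in the printed counts is pure chance under this assumption, so a drop from one period to the next is not, on its own, evidence that quality improved.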

Things get more complicated when we move beyond a single indicator to multiple indicators that are meant to reflect broader organizational performance and outcomes. Here we need to acknowledge uncertainty in how well the indicators (alone, or as part of an aggregate measure or mathematical model) characterize what actually matters in the real-life system, bearing in mind that any model can only ever be an approximation of reality.
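
A small, hypothetical example shows how the choice of aggregation alone can change the story. The Python sketch below scores two made-up organizations on three normalized indicators and combines them with two weighting schemes that could each be defended; the organizations, scores, weights and the `composite` helper are all invented for illustration.

```python
# Hypothetical normalized indicator scores (0 = worst, 1 = best).
scores = {
    "Org A": {"reach": 0.9, "satisfaction": 0.5, "cost_efficiency": 0.6},
    "Org B": {"reach": 0.6, "satisfaction": 0.8, "cost_efficiency": 0.7},
}

# Two hypothetical weighting schemes; each set of weights sums to 1.
weightings = {
    "reach-focused": {"reach": 0.6, "satisfaction": 0.2, "cost_efficiency": 0.2},
    "experience-focused": {"reach": 0.2, "satisfaction": 0.5, "cost_efficiency": 0.3},
}


def composite(org_scores: dict, weights: dict) -> float:
    """Weighted-sum composite index of one organization's indicator scores."""
    return sum(weights[k] * org_scores[k] for k in weights)


results = {}
for scheme, weights in weightings.items():
    totals = {org: round(composite(s, weights), 3) for org, s in scores.items()}
    leader = max(totals, key=totals.get)
    results[scheme] = (totals, leader)
    print(f"{scheme}: {totals} -> ranked first: {leader}")
```

Under the reach-focused weights Org A comes out ahead (0.76 vs 0.66); under the experience-focused weights Org B does (0.73 vs 0.61). Neither ranking is wrong as such; the ordering is partly an artefact of the weights chosen.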

Then there is uncertainty in key parameters. In some cases a parameter is essentially unknown (for instance, attribution, drop-off, displacement and deadweight in SROI analysis); in others, a parameter varies considerably but is represented deterministically, as a single fixed value.
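
To make this concrete, here is a minimal Python sketch of a simplified SROI-style calculation in which every parameter is a single fixed value. It assumes a common simplified structure (gross outcome value reduced by deadweight, displacement and attribution, then faded by drop-off each year and discounted); the function name `sroi_ratio` and all input values are hypothetical, not taken from any real analysis.

```python
def sroi_ratio(outcome_qty: float, proxy_value: float, deadweight: float,
               displacement: float, attribution: float, drop_off: float,
               years: int, discount_rate: float, investment: float) -> float:
    """Simplified, deterministic SROI ratio (illustrative structure only)."""
    # Year-1 net impact: remove what would have happened anyway (deadweight),
    # outcomes merely displaced from elsewhere, and others' contribution (attribution).
    impact = (outcome_qty * proxy_value * (1 - deadweight)
              * (1 - displacement) * (1 - attribution))
    present_value = 0.0
    for year in range(1, years + 1):
        present_value += impact / (1 + discount_rate) ** year  # discount to today
        impact *= 1 - drop_off  # the outcome fades by drop_off each following year
    return present_value / investment


# Hypothetical single-value inputs: each one hides a range of plausible values.
ratio = sroi_ratio(outcome_qty=50, proxy_value=2_000, deadweight=0.25,
                   displacement=0.10, attribution=0.30, drop_off=0.20,
                   years=3, discount_rate=0.035, investment=100_000)
print(f"SROI ratio: {ratio:.2f} : 1")
```

With these inputs the sketch reports a ratio of about 1.08 : 1, presented as one crisp number even though attribution, deadweight, displacement and drop-off were each essentially guessed.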

Embracing the grey:

- Acknowledge and document uncertainty and interpret measures appropriately

Metrics are often presented with an aura of black-and-white analytical rigour, which may be misplaced given the degree of parameter uncertainty and model limitations. As George E.P. Box, a British statistician and a pioneer in quality science, points out, “Remember that all models are wrong; the practical question is how wrong do they have to be to not be useful.” Understanding uncertainty is key to framing how performance measures, SROI ratios and evaluation results should be interpreted and applied to best effect. Our major challenges in this respect are to avoid:

- isolating indicators and model results from documentation about how and why they were calculated, and from key caveats around context and interpretation.

- using indicators to track accountability and make comparisons where uncertainty is significant.

Fundamentally, our work should be used to spark learning and critical thinking, and ultimately to foster better decisions. If a calculation is highly uncertain, it isn’t appropriate to use it for accountability or comparison.

- Build uncertainty explicitly into calculations

The field of quantifying uncertainty is well established and growing. Here are a few methods that may be relevant to your work:

- Sensitivity analysis examines how the uncertainty in a mathematical model's output can be apportioned to different sources of uncertainty in its inputs. Typically, each input is varied across a plausible range to see how much it moves the model results.

- Stochastic models use probability distributions for input variables rather than a single best guess. Methods such as Monte Carlo simulation can then propagate that uncertainty through to the result variables, a step Excel can accommodate quite easily. (Fuzzy logic models are an alternative to stochastic, probability-based models.)

- Bayesian statistics expresses uncertainty about parameters as probability distributions that are updated in light of observed data, and lets you formally combine data with existing knowledge or expertise.
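
Sensitivity analysis, the first method above, can be sketched in a few lines of Python. This is a one-at-a-time version (the simplest variant, not a full variance-based analysis): each uncertain SROI parameter is pushed to the low and high ends of a plausible range while the others stay at their base values. The simplified one-year model, the base values and the ranges are all hypothetical.

```python
# Hypothetical gross outcome value (quantity x financial proxy) and investment.
OUTCOME_VALUE = 100_000
INVESTMENT = 25_000

# Hypothetical base-case values and plausible (low, high) ranges.
BASE = {"deadweight": 0.25, "displacement": 0.10, "attribution": 0.30}
RANGES = {"deadweight": (0.10, 0.50), "displacement": (0.00, 0.30), "attribution": (0.10, 0.60)}


def sroi_ratio(p: dict) -> float:
    """Simplified one-year SROI ratio for a given set of parameter values."""
    net = OUTCOME_VALUE * (1 - p["deadweight"]) * (1 - p["displacement"]) * (1 - p["attribution"])
    return net / INVESTMENT


base_ratio = sroi_ratio(BASE)
swings = {}
for name, (low, high) in RANGES.items():
    # Vary one parameter at a time, holding the others at their base values.
    ratios = (sroi_ratio({**BASE, name: low}), sroi_ratio({**BASE, name: high}))
    swings[name] = (min(ratios), max(ratios))

# Largest swing first, as in a tornado chart.
ranked = sorted(swings, key=lambda n: swings[n][1] - swings[n][0], reverse=True)
for name in ranked:
    low_r, high_r = swings[name]
    print(f"{name:>12}: {low_r:.2f} to {high_r:.2f} (base case {base_ratio:.2f})")
```

With these made-up ranges, attribution moves the ratio most (from about 1.08 to 2.43 around a base case of 1.89), which flags it as the parameter most worth investigating further or reporting as a range.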
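
The stochastic approach can be sketched the same way. The Python below is a minimal Monte Carlo simulation, offered as an alternative to the Excel route mentioned above: instead of one value per parameter, it draws deadweight, displacement and attribution from triangular distributions (low, most likely, high) and reports the spread of the resulting ratio. The distributions, the simplified one-year model and every number are hypothetical.

```python
import random
import statistics

# Hypothetical gross outcome value (quantity x financial proxy) and investment.
OUTCOME_VALUE = 100_000
INVESTMENT = 25_000
N_DRAWS = 10_000

# Hypothetical (low, most likely, high) judgements for each uncertain parameter.
PARAMS = {
    "deadweight": (0.10, 0.25, 0.50),
    "displacement": (0.00, 0.10, 0.30),
    "attribution": (0.10, 0.30, 0.60),
}

rng = random.Random(1)  # fixed seed so the run is reproducible
ratios = []
for _ in range(N_DRAWS):
    # random.triangular takes (low, high, mode), in that order.
    p = {name: rng.triangular(low, high, mode) for name, (low, mode, high) in PARAMS.items()}
    net = OUTCOME_VALUE * (1 - p["deadweight"]) * (1 - p["displacement"]) * (1 - p["attribution"])
    ratios.append(net / INVESTMENT)

cuts = statistics.quantiles(ratios, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentile
p5, p95 = cuts[0], cuts[-1]
median = statistics.median(ratios)
share_below_one = sum(r < 1 for r in ratios) / N_DRAWS

print(f"Median SROI ratio: {median:.2f}")
print(f"90% of simulated ratios fall between {p5:.2f} and {p95:.2f}")
print(f"Share of runs where the ratio is below 1: {share_below_one:.1%}")
```

Reporting the median alongside the 5th to 95th percentile range, or the chance that the ratio falls below 1, conveys the uncertainty that a single ratio hides.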
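
Finally, a minimal Bayesian sketch in Python, using the standard beta-binomial (conjugate) update rather than any particular Bayesian software. It assumes, hypothetically, that an expert's prior belief about deadweight (the share of people who would have reached the outcome anyway) is centred on 25%, and that a small comparison group supplies data; all numbers are invented.

```python
import random
import statistics

# Hypothetical expert prior for deadweight: Beta(5, 15), which has mean 0.25.
prior_a, prior_b = 5, 15
prior_mean = prior_a / (prior_a + prior_b)

# Hypothetical comparison-group data: 14 of 40 reached the outcome without the program.
achieved, n = 14, 40

# Conjugate update: successes add to a, failures add to b.
post_a = prior_a + achieved
post_b = prior_b + (n - achieved)
post_mean = post_a / (post_a + post_b)

# Approximate a 90% credible interval by sampling from the posterior Beta distribution.
rng = random.Random(7)  # fixed seed so the run is reproducible
draws = [rng.betavariate(post_a, post_b) for _ in range(20_000)]
cuts = statistics.quantiles(draws, n=20)

print(f"Prior mean (expert):  {prior_mean:.3f}")
print(f"Data alone:           {achieved / n:.3f}")
print(f"Posterior mean:       {post_mean:.3f}")
print(f"90% credible interval: {cuts[0]:.3f} to {cuts[-1]:.3f}")
```

The posterior mean (about 0.317) sits between the expert's 0.25 and the data's 0.35, and the interval around it narrows as more comparison-group data comes in.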

Recommended Read:

Millett Granger Morgan and Max Henrion, 1992

Uncertainty: A Guide to Dealing with Uncertainty in Quantitative Risk and Policy Analysis

This is an older but pretty useful book for laying out the issues and approaches to addressing uncertainty. It was the key text for the course I took on risk assessment and decision analysis at SFU many moons ago.